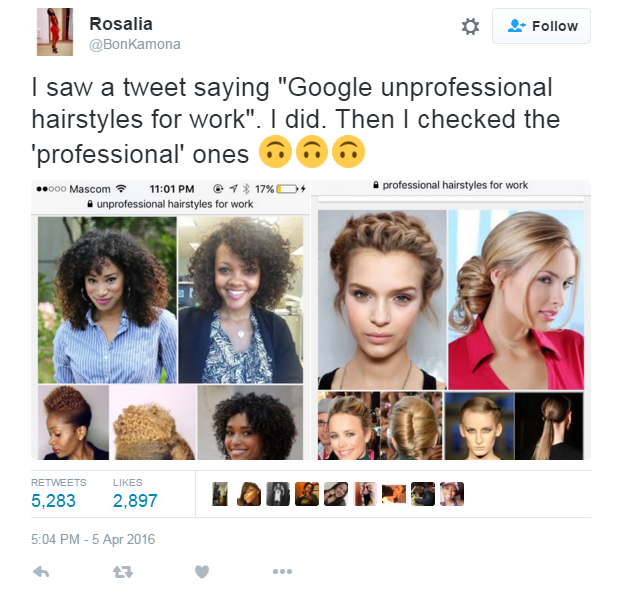

I knew I wouldn’t be able to look at Google the same way after reading this book, but I didn’t expect it to be this deeply systemic, pervasive, and quantifiable. Every screenshot Safiya Noble presents in Algorithms of Oppression: How Search Engines Reinforce Racism serves to visually present her arguments in ways that are hard to reject or ignore.

I, like many of my peers, was struck by the chapter on Dylann Roof. I think we are generally complacent in our consumption of Google results: it’s easy and, more importantly, comfortable for us to assume that the order of results from a Google search is the accurate one. I can generally trust that I’ll find a definition, Wikipedia page, or news article pretty early on when I search for things. Naturally, I would also expect that Googling things gives me the objectively best results first, followed by more distant others (unless I’m incredibly desperate, I almost never go past the first Google page; why bother?). It’s becoming increasingly clear to me that the idea of “best results” coming early is an entirely subjective one, heavily influenced by Google’s algorithms and by the manipulation of those algorithms by those tagging websites or articles that lean radically white supremacist. “Best” is an unquantifiable term, a deeply human and subjective idea, and the concept that it can be judged without bias by a system that is created by people with inherent biases is problematic.

I think a major problem is ease of access. If I Google a fact, I’m going to trust the first thing I see, likely without understanding the biases of the website, the contributor who supplied the fact, or the levels of fact checking that went into the information provided. In a lot of ways, this is the only possible outcome of access to something like Google: ease of access makes us desperate for speed and accuracy, and if the speed is there, we assume accuracy is too. It’s kind of bleak, to be honest. I’m not sure I know how to shift the behaviors and attitudes that continue to support the presence and accessibility of white supremacist, racist information online.

Ultimately, I think it comes down to a very human desire for rationality, which is not a very human characteristic. We are always swayed or convinced by emotion and desire—I would argue that people aren’t inherently rational ever, no matter how hard they try. It’s very tempting to believe that we, as humans, can build something that’s completely rational, and I think we have trusted the notion that technology, algorithms, and Google searches are emotionless, unbiased things. But we all know that Google targets based on advertisements and previous search history, and algorithms and technology are formed by people who aren’t thinking rationally in the first place. I want to trust something to think clearly and without bias for me when I search things or write things, and it’s quite demoralizing knowing more and more that that desire is unreachable as of yet.

I would actually really love to hear Noble’s take on IBM Watson, the same A.I. that beat Ken Jennings at Jeopardy. Given my limited experience working with Watson, I don’t know whether it has any bias, but I think as A.I. and algorithms evolve, it would be interesting to see how everything develops.
